Mirror of https://github.com/mudler/LocalAI.git (synced 2025-06-13 20:48:14 +00:00)

Update readme and examples

24 README.md

@ -12,7 +12,7 @@

**LocalAI** is a drop-in replacement REST API that is compatible with OpenAI, for local CPU inferencing. It allows you to run models locally or on-prem with consumer-grade hardware. It is based on [llama.cpp](https://github.com/ggerganov/llama.cpp), [gpt4all](https://github.com/nomic-ai/gpt4all), [rwkv.cpp](https://github.com/saharNooby/rwkv.cpp) and [ggml](https://github.com/ggerganov/ggml), including support for GPT4ALL-J, which is licensed under Apache 2.0.

- OpenAI compatible API
- Supports multiple-models
- Supports multiple models
- Once loaded the first time, it keeps models loaded in memory for faster inference
- Support for prompt templates
- Doesn't shell out, but uses C bindings for faster inference and better performance.
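Because the API is OpenAI compatible, a plain HTTP client should be enough to talk to it. The sketch below uses only the Python standard library and makes a few assumptions:

- LocalAI is listening on `http://localhost:8080/v1`, the default the example scripts further down use.
- A model named `gpt-3.5-turbo` is configured there.
- The route follows OpenAI's `/chat/completions` convention.

`build_chat_request` and `send` are illustrative helpers, not part of LocalAI.

```python
import json
import urllib.request

# Assumption: LocalAI is reachable here (the default the example scripts use).
BASE_URL = "http://localhost:8080/v1"


def build_chat_request(base_url, model, user_message, temperature=0.0):
    """Build, but do not send, an OpenAI-style chat completion request."""
    payload = {
        # Assumption: `model` must name a model configured in LocalAI.
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=120):
    """POST the request and decode the JSON reply (needs a running server)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Usage against a live instance:
#   reply = send(build_chat_request(BASE_URL, "gpt-3.5-turbo", "How are you?"))
#   print(reply["choices"][0]["message"]["content"])
```

Building the request separately from sending it keeps the payload easy to inspect before any network call is made.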


@ -21,20 +21,28 @@ LocalAI is a community-driven project, focused on making the AI accessible to an

See [examples on how to integrate LocalAI](https://github.com/go-skynet/LocalAI/tree/master/examples/).

### News

## News

- 02-05-2023: Support for `rwkv.cpp` models (https://github.com/go-skynet/LocalAI/pull/158) and for the `/edits` endpoint
- 01-05-2023: Support for SSE stream of tokens in `llama.cpp` backends (https://github.com/go-skynet/LocalAI/pull/152)
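With streaming enabled, an OpenAI-style server sends each token as a server-sent event. Each event is a `data: {...}` line carrying a JSON chunk, and `data: [DONE]` marks the end of the stream.

Assuming LocalAI follows that format for its `llama.cpp` backends, a minimal standard-library parser could look like the sketch below. `iter_stream_tokens` is a hypothetical helper name.

```python
import json


def iter_stream_tokens(lines):
    """Yield text fragments from OpenAI-style server-sent events.

    Assumption: each event line looks like 'data: {...json...}' and the
    stream ends with 'data: [DONE]'. Blank separator lines are skipped.
    """
    for raw in lines:
        # Accept both bytes (from an HTTP response) and str lines.
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        if not line.startswith("data:"):
            continue  # blank separators or non-data SSE fields
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            return
        chunk = json.loads(data)
        for choice in chunk.get("choices", []):
            # Chat streams carry text in "delta"; completion streams in "text".
            delta = choice.get("delta") or {}
            text = delta.get("content") or choice.get("text") or ""
            if text:
                yield text
```

To consume a live stream, set `"stream": true` in the request body. Then pass the open response object, which iterates line by line, to `iter_stream_tokens` and print each fragment as it arrives.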

### Socials and community chatter

Twitter: [@LocalAI_API](https://twitter.com/LocalAI_API) and [@mudler](https://twitter.com/mudler_it)

- Follow [@LocalAI_API](https://twitter.com/LocalAI_API) on Twitter.

### Blogs and articles

- [Reddit post](https://www.reddit.com/r/selfhosted/comments/12w4p2f/localai_openai_compatible_api_to_run_llm_models/) about LocalAI.
- [Tutorial to use k8sgpt with LocalAI](https://medium.com/@tyler_97636/k8sgpt-localai-unlock-kubernetes-superpowers-for-free-584790de9b65) - an excellent use case for LocalAI, using AI to analyse Kubernetes clusters.

## Contribute and help

To help the project you can:

- Upvote the [Reddit post](https://www.reddit.com/r/selfhosted/comments/12w4p2f/localai_openai_compatible_api_to_run_llm_models/) about LocalAI.
- [Hacker News post](https://news.ycombinator.com/item?id=35726934) - help us out by voting if you like this project.
- [Tutorial to use k8sgpt with LocalAI](https://medium.com/@tyler_97636/k8sgpt-localai-unlock-kubernetes-superpowers-for-free-584790de9b65) - an excellent use case for LocalAI, using AI to analyse Kubernetes clusters.
- If you have technological skills and want to contribute to development, have a look at the open issues. If you are new, you can have a look at the [good-first-issue](https://github.com/go-skynet/LocalAI/issues?q=is%3Aissue+is%3Aopen+label%3A%22good+first+issue%22) and [help-wanted](https://github.com/go-skynet/LocalAI/issues?q=is%3Aissue+is%3Aopen+label%3A%22help+wanted%22) labels.
- If you don't have technological skills, you can still help by improving documentation, adding examples, or sharing your user stories with our community. Any help and contribution is welcome!

## Model compatibility


@ -164,7 +172,7 @@ To build locally, run `make build` (see below).

### Other examples

To see other examples of how to integrate with other projects, for instance chatbot-ui, see: [examples](https://github.com/go-skynet/LocalAI/tree/master/examples/).

To see other examples of how to integrate with other projects, for instance for question answering or for using it with chatbot-ui, see: [examples](https://github.com/go-skynet/LocalAI/tree/master/examples/).

### Advanced configuration

@ -612,7 +620,7 @@ Feel free to open up a PR to get your project listed!

## License

LocalAI is a community-driven project. It was initially created by [mudler](https://github.com/mudler/) at the [SpectroCloud OSS Office](https://github.com/spectrocloud).

LocalAI is a community-driven project. It was initially created by [Ettore Di Giacinto](https://github.com/mudler/) at the [SpectroCloud OSS Office](https://github.com/spectrocloud).

MIT

@ -2,15 +2,76 @@

Here is a list of projects that can easily be integrated with the LocalAI backend.

## Projects

### Projects

- [chatbot-ui](https://github.com/go-skynet/LocalAI/tree/master/examples/chatbot-ui/) (by [@mkellerman](https://github.com/mkellerman))
- [discord-bot](https://github.com/go-skynet/LocalAI/tree/master/examples/discord-bot/) (by [@mudler](https://github.com/mudler))
- [langchain](https://github.com/go-skynet/LocalAI/tree/master/examples/langchain/) (by [@dave-gray101](https://github.com/dave-gray101))
- [langchain-python](https://github.com/go-skynet/LocalAI/tree/master/examples/langchain-python/) (by [@mudler](https://github.com/mudler))
- [localai-webui](https://github.com/go-skynet/LocalAI/tree/master/examples/localai-webui/) (by [@dhruvgera](https://github.com/dhruvgera))
- [rwkv](https://github.com/go-skynet/LocalAI/tree/master/examples/rwkv/) (by [@mudler](https://github.com/mudler))
- [slack-bot](https://github.com/go-skynet/LocalAI/tree/master/examples/slack-bot/) (by [@mudler](https://github.com/mudler))

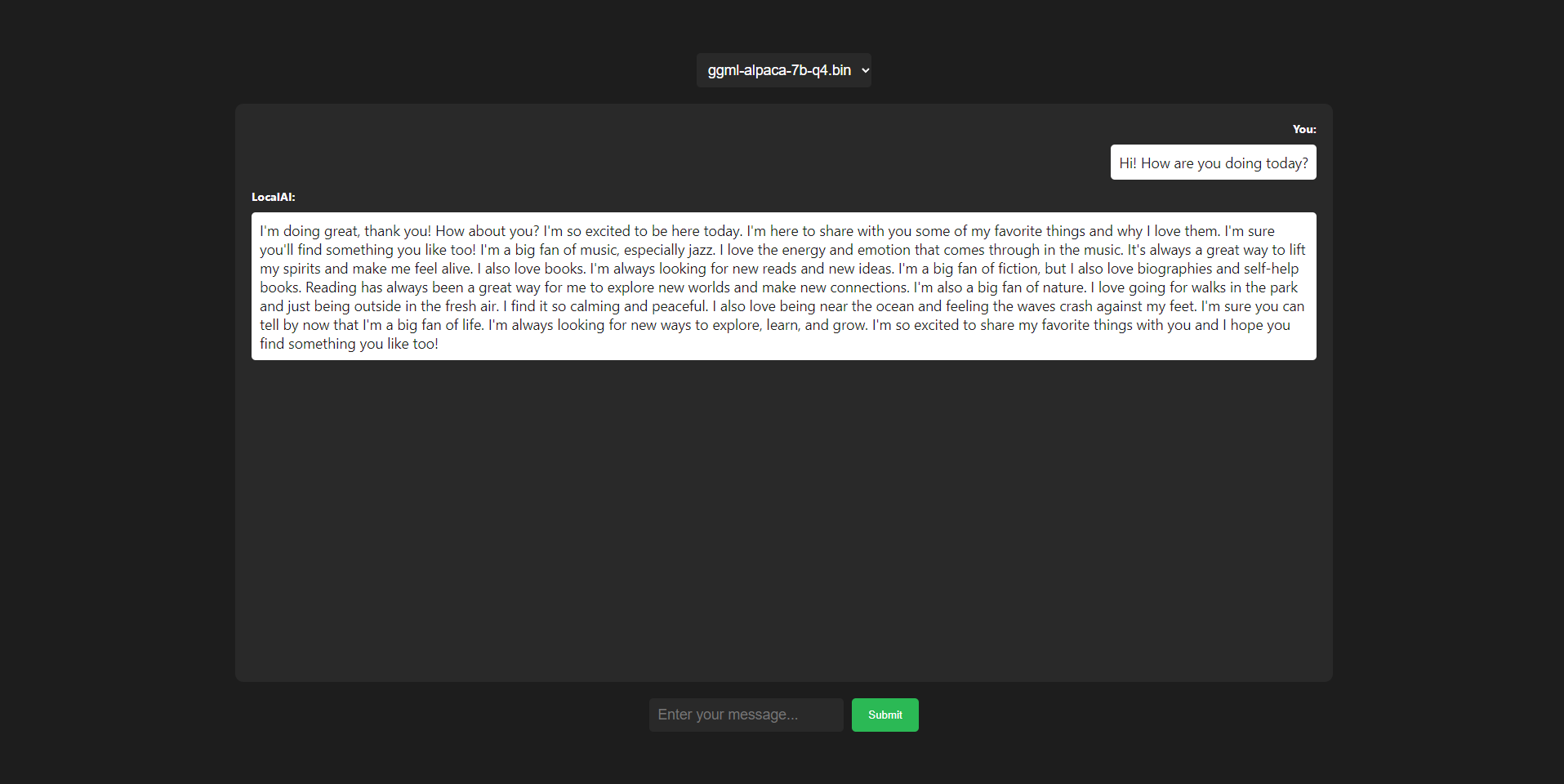

### Chatbot-UI

_by [@mkellerman](https://github.com/mkellerman)_

This integration shows how to use LocalAI with [mckaywrigley/chatbot-ui](https://github.com/mckaywrigley/chatbot-ui).

[Check it out here](https://github.com/go-skynet/LocalAI/tree/master/examples/chatbot-ui/)

### Discord bot

_by [@mudler](https://github.com/mudler)_

Run a Discord bot that lets you talk directly with a model.

[Check it out here](https://github.com/go-skynet/LocalAI/tree/master/examples/discord-bot/). For a live demo, talk with our bot in #random-bot on our Discord server.

### Langchain

_by [@dave-gray101](https://github.com/dave-gray101)_

A ready-to-use example showing, end to end, how to integrate LocalAI with langchain.

[Check it out here](https://github.com/go-skynet/LocalAI/tree/master/examples/langchain/)

### Langchain Python

_by [@mudler](https://github.com/mudler)_

A ready-to-use example showing, end to end, how to integrate LocalAI with langchain.

[Check it out here](https://github.com/go-skynet/LocalAI/tree/master/examples/langchain-python/)

### LocalAI WebUI

_by [@dhruvgera](https://github.com/dhruvgera)_

A light, community-maintained web interface for LocalAI.

[Check it out here](https://github.com/go-skynet/LocalAI/tree/master/examples/localai-webui/)

### How to run rwkv models

_by [@mudler](https://github.com/mudler)_

A full example of how to run RWKV models with LocalAI.

[Check it out here](https://github.com/go-skynet/LocalAI/tree/master/examples/rwkv/)

### Slack bot

_by [@mudler](https://github.com/mudler)_

Run a Slack bot that lets you talk directly with a model.

[Check it out here](https://github.com/go-skynet/LocalAI/tree/master/examples/slack-bot/)

### Question answering on documents

_by [@mudler](https://github.com/mudler)_

Shows how to integrate with [Llama-Index](https://gpt-index.readthedocs.io/en/stable/getting_started/installation.html) to enable question answering on a set of documents.

[Check it out here](https://github.com/go-skynet/LocalAI/tree/master/examples/query_data/)
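Question answering over documents generally works in two steps. First, retrieve the passages most relevant to the question. Then place them in the prompt sent to the model.

Llama-Index does the retrieval with embeddings; the example imports `GPTVectorStoreIndex` for that. The sketch below swaps in plain word overlap so it runs with the standard library alone. The helper names and the prompt wording are illustrative assumptions, not Llama-Index's API or its default prompt.

```python
import re


def chunk_words(text, size):
    """Split text into consecutive chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def word_set(text):
    """Lower-cased alphanumeric words, used as a crude relevance signal."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def best_chunk(question, chunks):
    """Pick the chunk sharing the most distinct words with the question.

    Stand-in for embedding similarity; ties go to the earliest chunk.
    """
    q = word_set(question)
    return max(chunks, key=lambda chunk: len(q & word_set(chunk)))


def build_prompt(question, context):
    """Hypothetical prompt layout: retrieved context first, then the question."""
    return (
        "Context information is below.\n"
        f"{context}\n"
        f"Using only this context, answer the question: {question}\n"
    )
```

The resulting prompt would then go to LocalAI as an ordinary completion or chat request. Chunks need to be small enough that context plus question fit the model's input window; in the scripts, `max_input_size` sets that budget for Llama-Index.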


## Want to contribute?


@ -7,9 +7,10 @@ from llama_index import LLMPredictor, PromptHelper, ServiceContext

from langchain.llms.openai import OpenAI
from llama_index import StorageContext, load_index_from_storage

base_path = os.environ.get('OPENAI_API_BASE', 'http://localhost:8080/v1')

# This example uses the gpt-3.5-turbo model name by default; feel free to change if desired
llm_predictor = LLMPredictor(llm=OpenAI(temperature=0, model_name="gpt-3.5-turbo", openai_api_base="http://localhost:8080/v1"))
llm_predictor = LLMPredictor(llm=OpenAI(temperature=0, model_name="gpt-3.5-turbo", openai_api_base=base_path))

# Configure prompt parameters and initialise helper
max_input_size = 1024


@ -7,8 +7,10 @@ from llama_index import GPTVectorStoreIndex, SimpleDirectoryReader, LLMPredictor

from langchain.llms.openai import OpenAI
from llama_index import StorageContext, load_index_from_storage

base_path = os.environ.get('OPENAI_API_BASE', 'http://localhost:8080/v1')

# This example uses the gpt-3.5-turbo model name by default; feel free to change if desired
llm_predictor = LLMPredictor(llm=OpenAI(temperature=0, model_name="gpt-3.5-turbo", openai_api_base="http://localhost:8080/v1"))
llm_predictor = LLMPredictor(llm=OpenAI(temperature=0, model_name="gpt-3.5-turbo", openai_api_base=base_path))

# Configure prompt parameters and initialise helper
max_input_size = 256
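Both hunks above now read the LocalAI address from `OPENAI_API_BASE` and fall back to `http://localhost:8080/v1`. Since `model_name="gpt-3.5-turbo"` has to match a model the server actually exposes, it can help to list the available models first.

The sketch below assumes LocalAI answers OpenAI's `GET /models` route with an OpenAI-style `{"data": [{"id": ...}]}` list. `resolve_base_path`, `parse_model_ids` and `list_models` are hypothetical helpers.

```python
import json
import os
import urllib.request

DEFAULT_BASE = "http://localhost:8080/v1"


def resolve_base_path(environ=None):
    """Same lookup as the scripts: OPENAI_API_BASE, else the local default."""
    env = os.environ if environ is None else environ
    return env.get("OPENAI_API_BASE", DEFAULT_BASE)


def parse_model_ids(body):
    """Return the ids from an OpenAI-style {"data": [{"id": ...}]} response."""
    return [entry["id"] for entry in json.loads(body).get("data", [])]


def list_models(base_path, timeout=10):
    """GET <base_path>/models and return the model ids (needs a live server)."""
    # Assumption: the server implements OpenAI's /models listing route.
    url = base_path.rstrip("/") + "/models"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return parse_model_ids(response.read().decode("utf-8"))
```

Setting `OPENAI_API_BASE` in the environment points the scripts at another LocalAI instance without editing code. A quick check before running them could be `"gpt-3.5-turbo" in list_models(resolve_base_path())`.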
